Nvidia’s VMware-optimized AI software stack offers a strong alternative to doing machine learning in the AWS, Azure, and Google clouds. Nvidia LaunchPad lets you try it out for free.

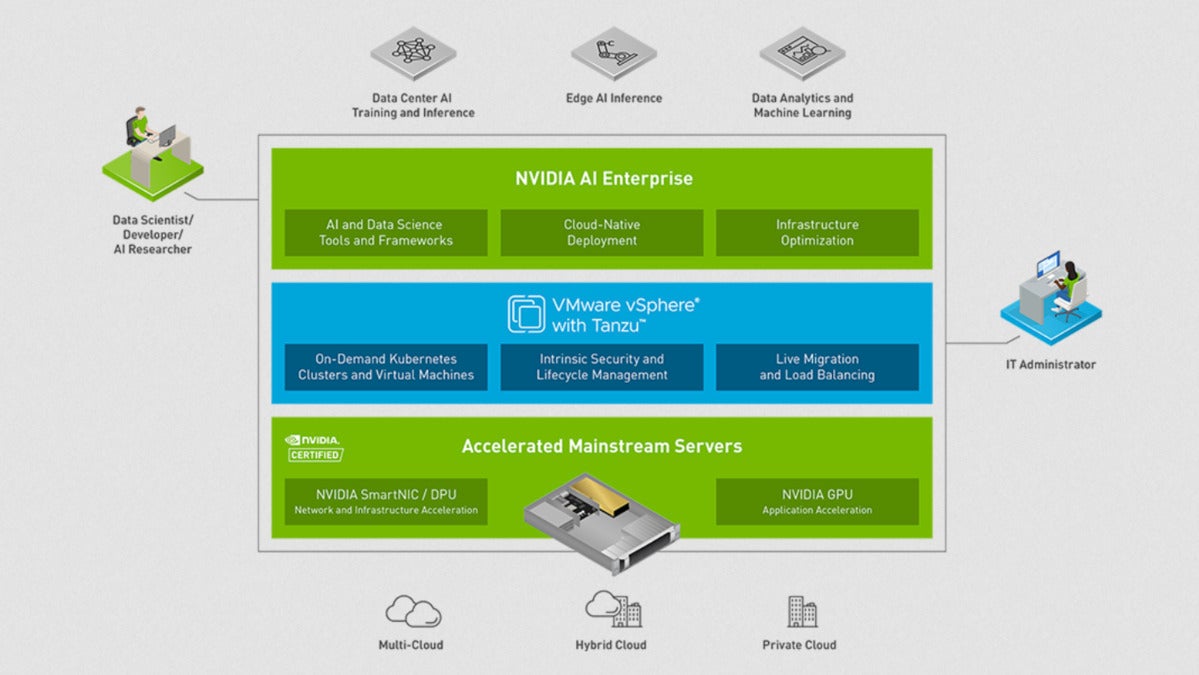

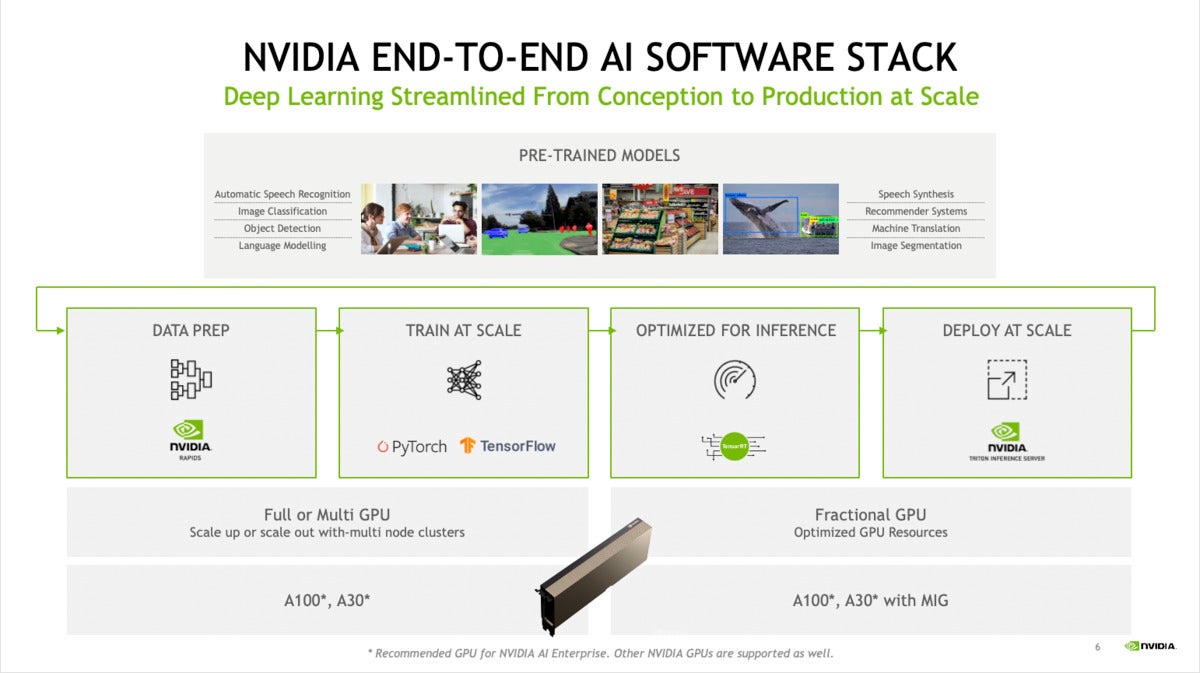

Nvidia AI Enterprise is an end-to-end AI software stack. It includes software to clean data and prepare it for training, perform the training of neural networks, convert the model to a more efficient form for inference, and deploy it to an inference server.
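Converting a model into "a more efficient form for inference" often involves lowering its numeric precision. The sketch below shows symmetric per-tensor INT8 quantization, one common scheme, applied to a few hypothetical weights. TensorRT's own INT8 path chooses scales from calibration data, so read this as an illustration of the concept rather than of TensorRT itself.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: one scale maps floats to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # An all-zero tensor would give a zero scale; fall back to 1.0 instead.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round each value to the nearest step, then clamp to the int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate floating-point values."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.52, -1.27, 0.003, 0.9, -0.41]  # hypothetical FP32 weights
    q, scale = quantize_int8(weights)
    print(q)  # [52, -127, 0, 90, -41]
    print(dequantize_int8(q, scale))
```

Note that the smallest weight, 0.003, rounds to 0. Lower precision trades some accuracy for a smaller, faster model, which is why quantized models need calibration and accuracy checks.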

In addition, the Nvidia AI software suite includes GPU, DPU (data processing unit), and accelerated network support for Kubernetes (the cloud-native deployment layer in the diagram below), and optimized support for shared devices on VMware vSphere with Tanzu. Tanzu Basic lets you run and manage Kubernetes in vSphere. (VMware Tanzu Labs is the new name for Pivotal Labs.)
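On Kubernetes, GPU support of this kind typically shows up as an extended resource that pods request. This minimal sketch assumes the Nvidia device plugin (for example, as deployed by the Nvidia GPU Operator) is installed and advertises GPUs as `nvidia.com/gpu`. The pod name, the `gpu_pod_manifest` helper, and the container image tag are hypothetical placeholders, not values taken from Nvidia AI Enterprise.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Return a minimal Pod manifest (as a dict) that requests GPUs.

    Assumes the Nvidia device plugin exposes GPUs as the extended resource
    "nvidia.com/gpu". Extended resources are requested through limits.
    """
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Lists the GPU(s) visible inside the container.
                    "command": ["nvidia-smi"],
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image tag; substitute your own.
    manifest = gpu_pod_manifest(
        "gpu-smoke-test", "nvcr.io/nvidia/cuda:11.4.2-base-ubuntu20.04"
    )
    # kubectl accepts JSON manifests, e.g.: python gpu_pod.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```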

Nvidia LaunchPad is a trial program that gives AI and data science teams short-term access to the complete Nvidia AI stack running on private compute infrastructure. Nvidia LaunchPad offers curated labs for Nvidia AI Enterprise, with access to Nvidia experts and training modules.

Nvidia AI Enterprise is an attempt to take AI model training and deployment out of the realm of academic research and of the biggest tech companies, which already have PhD-level data scientists and data centers full of GPUs, and into the realm of ordinary enterprises that need to apply AI for operations, product development, marketing, HR, and other areas. LaunchPad is a free way for those companies to let their IT administrators and AI practitioners gain hands-on experience with the Nvidia AI Enterprise stack on supported hardware.

The most common alternative to Nvidia AI Enterprise and LaunchPad is to use the GPUs (and other model training accelerators, such as TPUs and FPGAs) and AI software available from the hyperscale cloud providers, combined with the courses, models, and labs supplied by the cloud vendors and the AI framework open source communities.

What’s in Nvidia AI Enterprise

Nvidia AI Enterprise provides an integrated infrastructure layer for the development and deployment of AI solutions. It includes pre-trained models, GPU-aware software for data prep (RAPIDS), GPU-aware deep learning frameworks such as TensorFlow and PyTorch, software to convert models to a more efficient form for inference (TensorRT), and a scalable inference server (Triton).
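Triton accepts inference requests over HTTP/REST and gRPC using the KServe v2 inference protocol. As a minimal sketch, assuming a Triton server listening on its default HTTP port 8000 and a hypothetical model named `my_model` with a single FP32 input named `INPUT__0`, a client can build the JSON request with only the Python standard library. The helper function names are illustrative.

```python
import json
import math
import urllib.request


def build_infer_request(input_name, values, shape, datatype="FP32"):
    """Build a KServe v2 / Triton JSON inference request body.

    Tensor data is sent flattened in row-major order alongside its shape.
    """
    if math.prod(shape) != len(values):
        raise ValueError("number of values does not match the tensor shape")
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": list(shape),
                "datatype": datatype,
                "data": list(values),
            }
        ]
    }


def make_http_request(host, model_name, body):
    """Wrap the body in a POST to the model's v2 infer endpoint."""
    url = f"http://{host}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model and input names; a real model's config defines them.
    body = build_infer_request("INPUT__0", [0.1, 0.2, 0.3, 0.4], shape=(1, 4))
    req = make_http_request("localhost:8000", "my_model", body)
    print(req.full_url)
    # Sending the request requires a running Triton server:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["outputs"])
```

Triton also ships client libraries (`tritonclient`) that wrap this protocol; the raw request here simply makes the wire format visible.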

A library of pre-trained models is available through Nvidia’s NGC catalog for use with the Nvidia AI Enterprise software suite; these models can be fine-tuned on your datasets using Nvidia AI Enterprise TensorFlow Containers, for example. The deep learning frameworks supplied, while based on their open source versions, have been optimized for Nvidia GPUs.
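Fine-tuning a pre-trained model on your own data usually starts with converting that data into the format the training script expects. Many BERT question-answering examples read SQuAD v1.1-style JSON; assuming your script does too, a single question/answer pair could be wrapped as shown below. The `make_squad_example` helper and the sample text are hypothetical.

```python
import json


def make_squad_example(qid, title, context, question, answer_text):
    """Wrap one question/answer pair in SQuAD v1.1 JSON structure."""
    # SQuAD stores the answer as text plus its character offset in the context.
    # str.find returns the first occurrence, so repeated phrases need care.
    start = context.find(answer_text)
    if start < 0:
        raise ValueError("answer text must appear verbatim in the context")
    return {
        "version": "1.1",
        "data": [
            {
                "title": title,
                "paragraphs": [
                    {
                        "context": context,
                        "qas": [
                            {
                                "id": qid,
                                "question": question,
                                "answers": [
                                    {"text": answer_text, "answer_start": start}
                                ],
                            }
                        ],
                    }
                ],
            }
        ],
    }


if __name__ == "__main__":
    example = make_squad_example(
        "q1",
        "LaunchPad",
        "Nvidia LaunchPad gives teams short-term access to the Nvidia AI stack.",
        "Who gets short-term access?",
        "teams",
    )
    print(json.dumps(example, indent=2))
```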

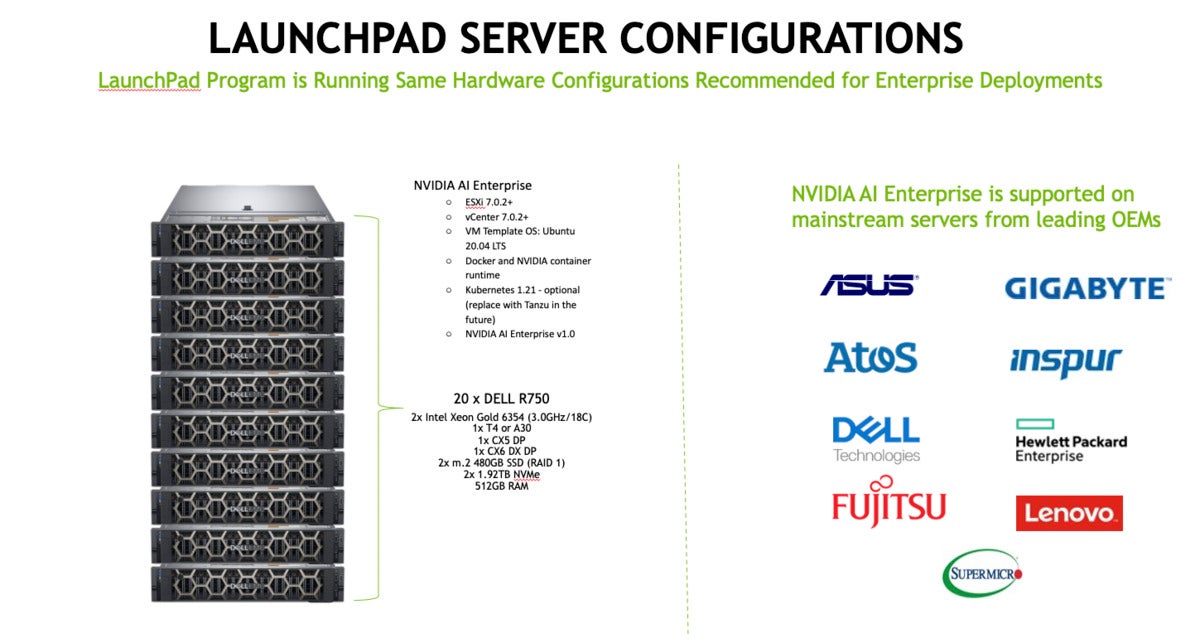

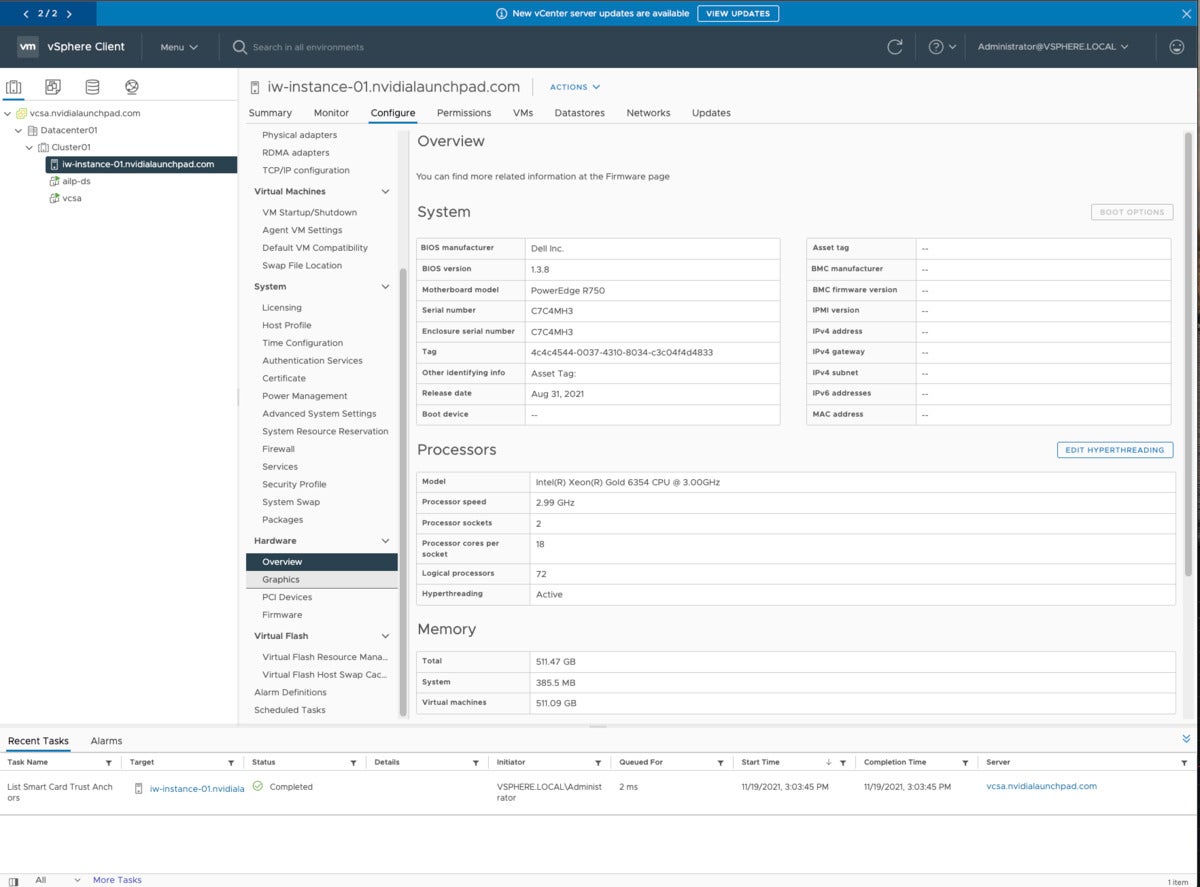

Nvidia AI Enterprise and LaunchPad hardware

Nvidia has been making a lot of noise about DGX systems, which have four to 16 A100 GPUs in various form factors, ranging from a tower workgroup appliance to rack-based systems designed for use in data centers. While the company is still committed to DGX for large installations, for Nvidia AI Enterprise trials under the LaunchPad program it has assembled smaller 1U to 2U rack-mounted commodity servers based on dual Intel Xeon Gold 6354 CPUs, a single Nvidia T4 or A30 GPU, and Nvidia DPUs. Nine Equinix Metal regions worldwide each have 20 such servers for use by Nvidia customers who qualify for LaunchPad trials.

Nvidia recommends the same systems for enterprise deployments of Nvidia AI Enterprise. These systems are available for rent or lease in addition to purchase.

Test driving Nvidia AI Enterprise

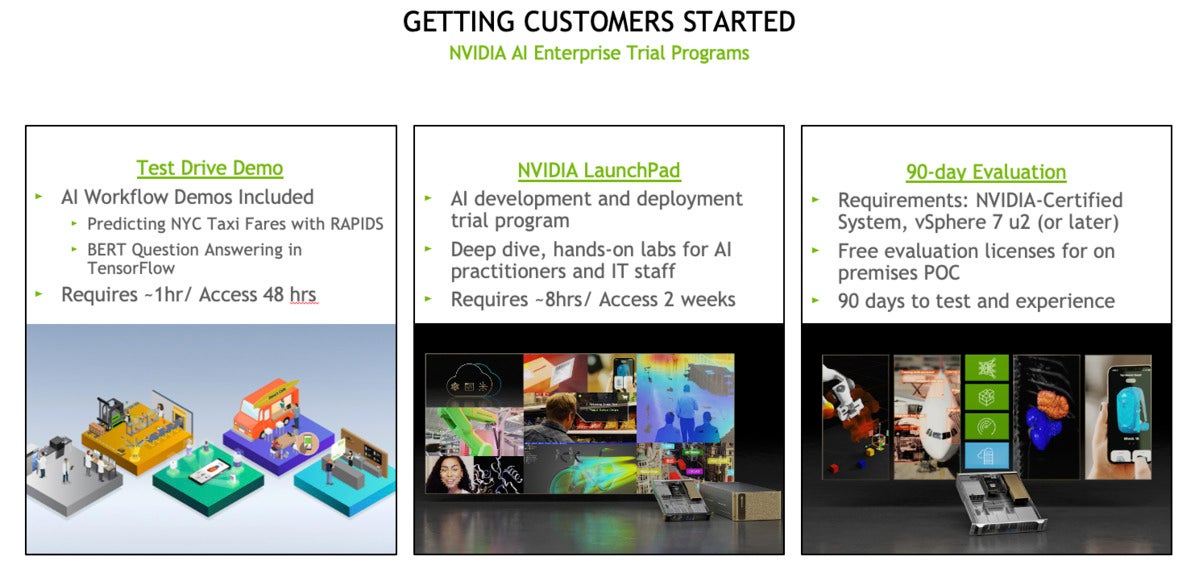

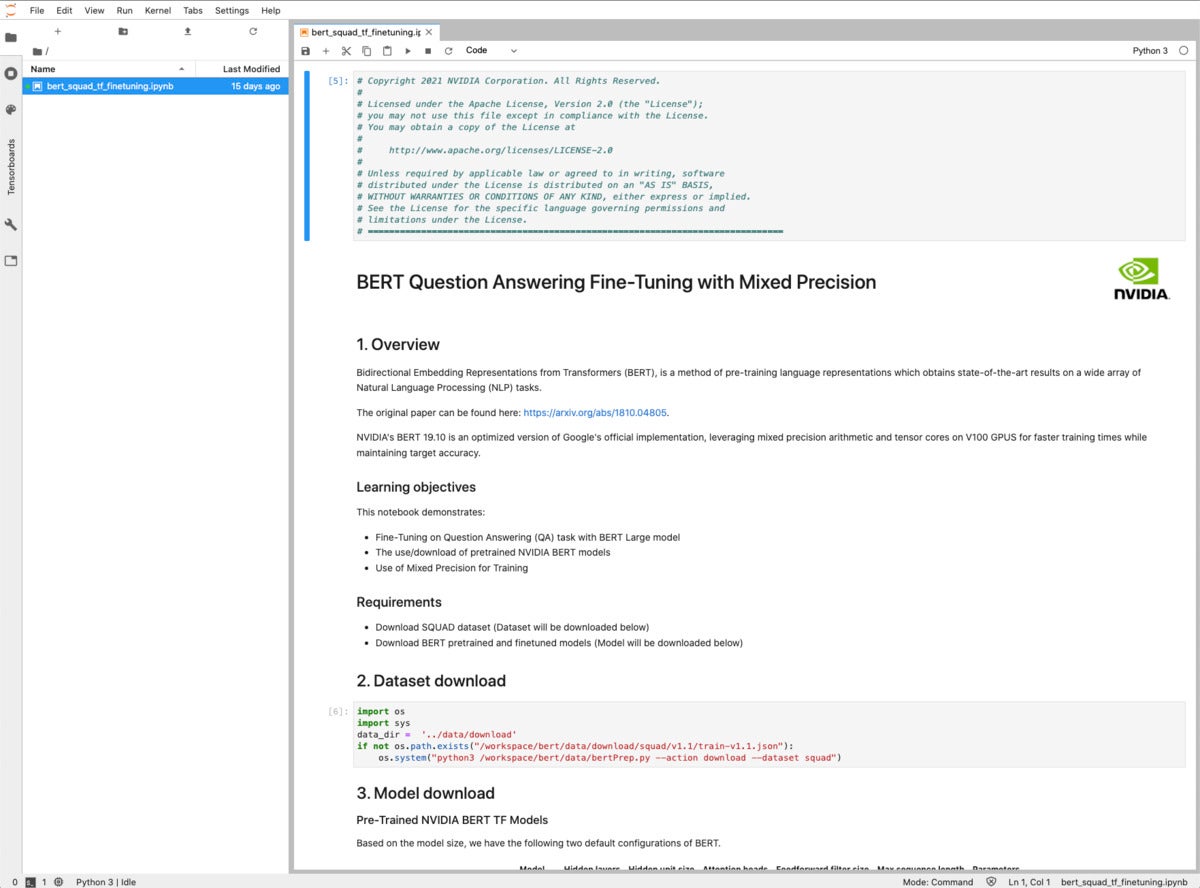

Nvidia offers three different trial programs to help customers get started with Nvidia AI Enterprise. For AI practitioners who just want to get their feet wet, there’s a test drive demo that includes predicting New York City taxi fares and trying BERT question answering in TensorFlow. The test drive requires about an hour of hands-on work and offers 48 hours of access.

LaunchPad is more extensive. It offers hands-on labs for AI practitioners and IT staff that require about eight hours of hands-on work, and it provides access to the systems for two weeks, with an optional extension to four weeks.

The third trial program is a 90-day on-premises evaluation, sufficient to perform a POC (proof of concept). The customer needs to supply (or rent) an Nvidia-certified system with VMware vSphere 7 Update 2 (or later), and Nvidia provides free evaluation licenses.


Nvidia LaunchPad demo for IT administrators

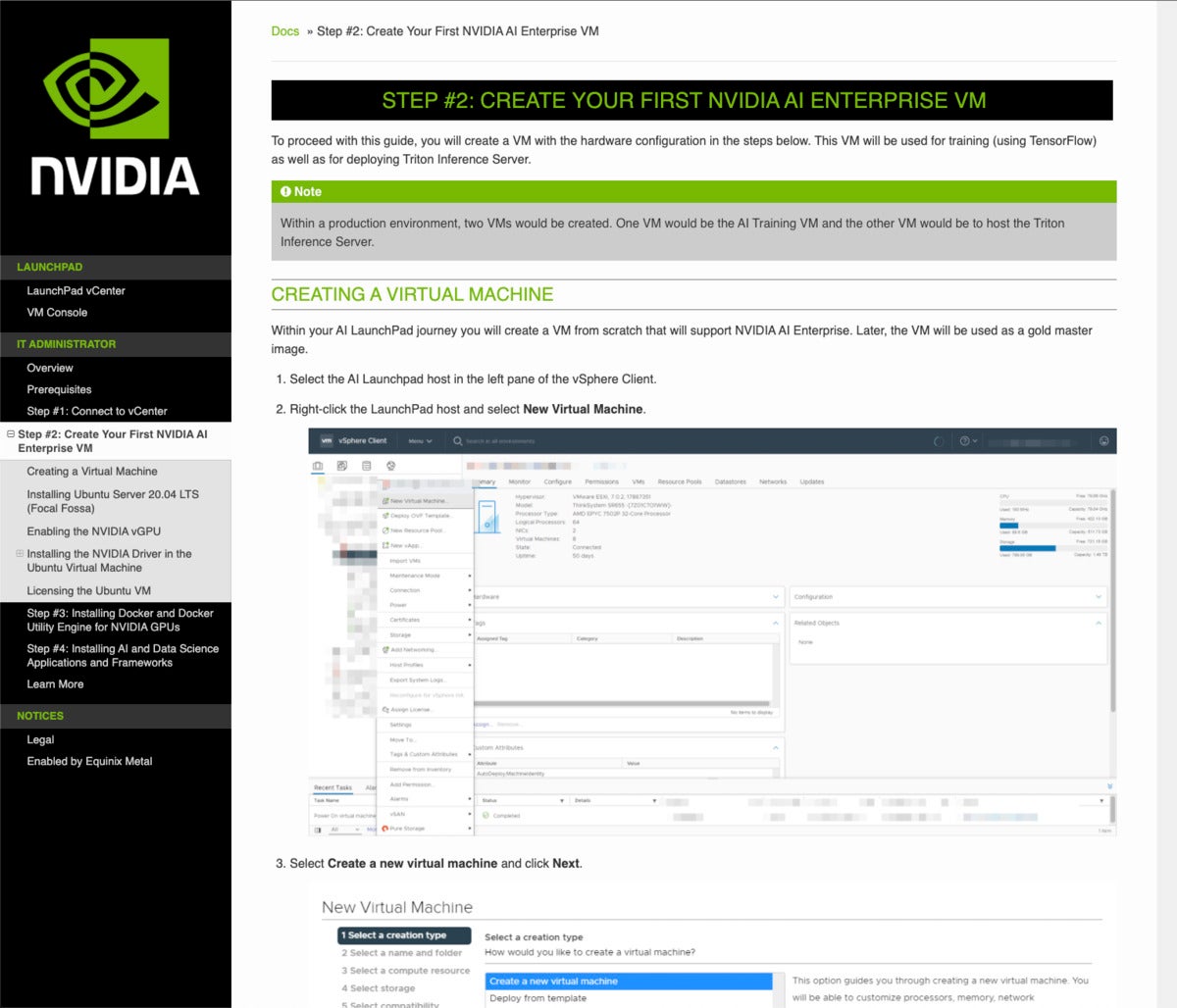

As I’m more interested in data science than I am in IT administration, I merely watched a demo of the hands-on administration lab, although I had access to it later. The first screenshot below shows the beginning of the lab instructions; the second shows a page from the VMware vSphere client web interface. According to Nvidia, most of the IT admins they train are already familiar with vSphere and Windows, but are less familiar with Ubuntu Linux.

LaunchPad lab for AI practitioners

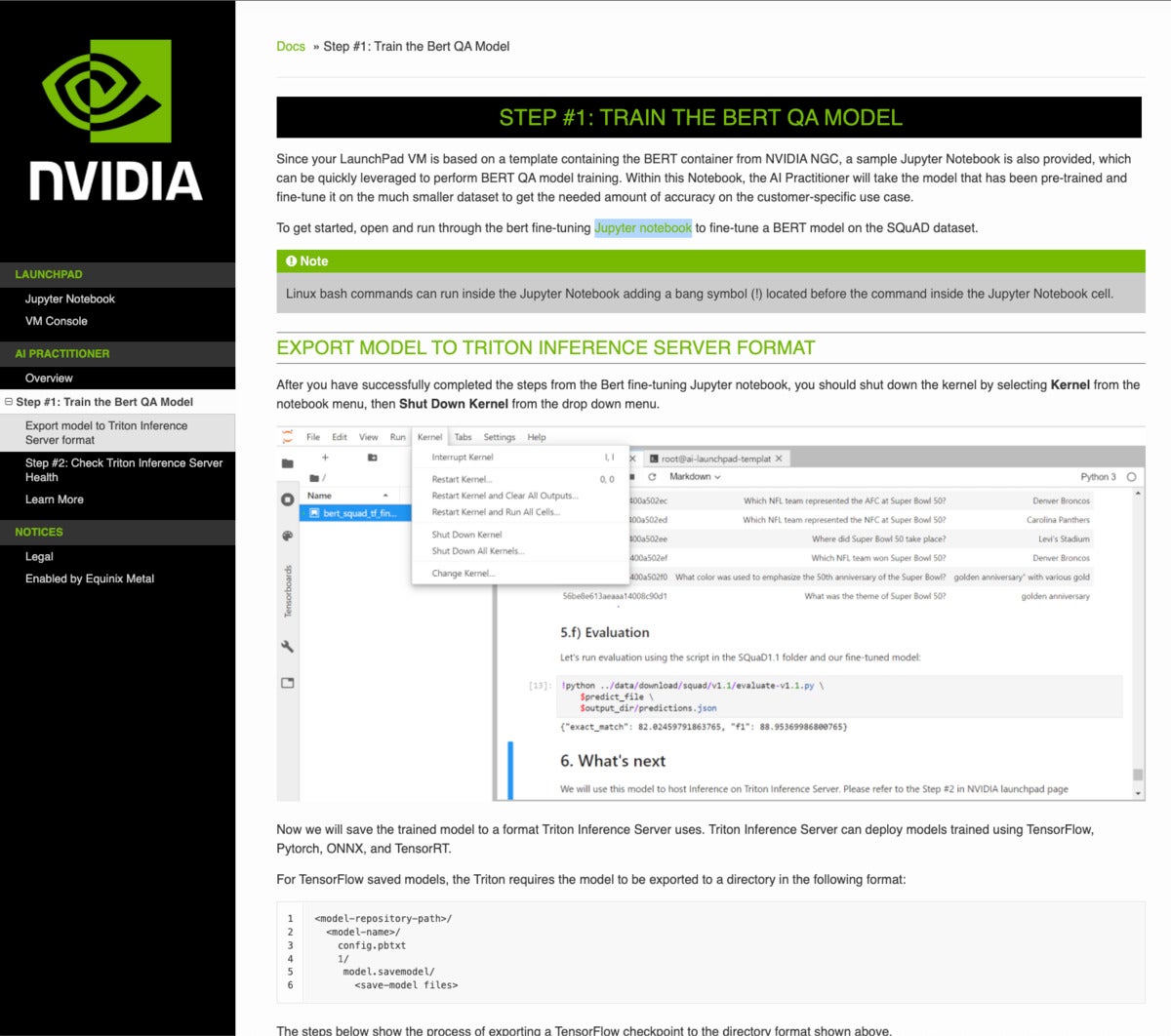

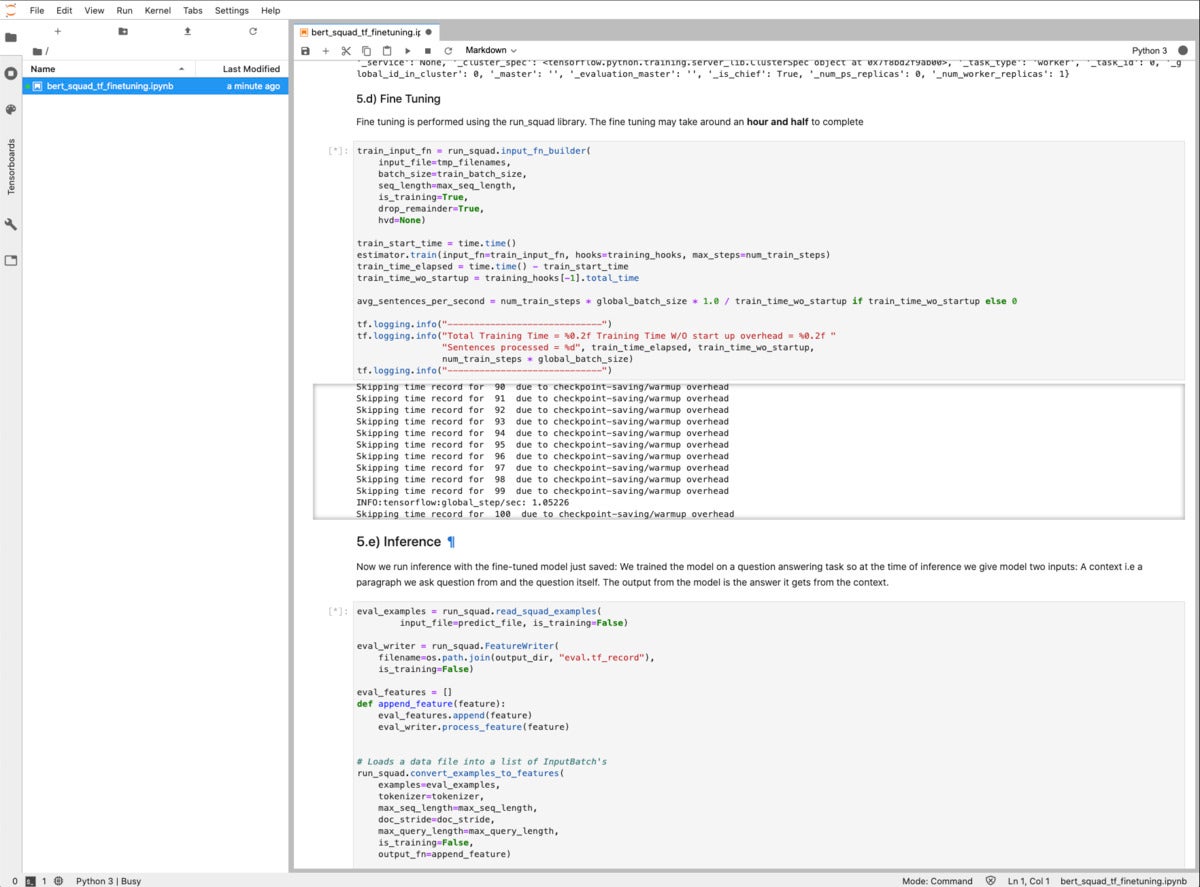

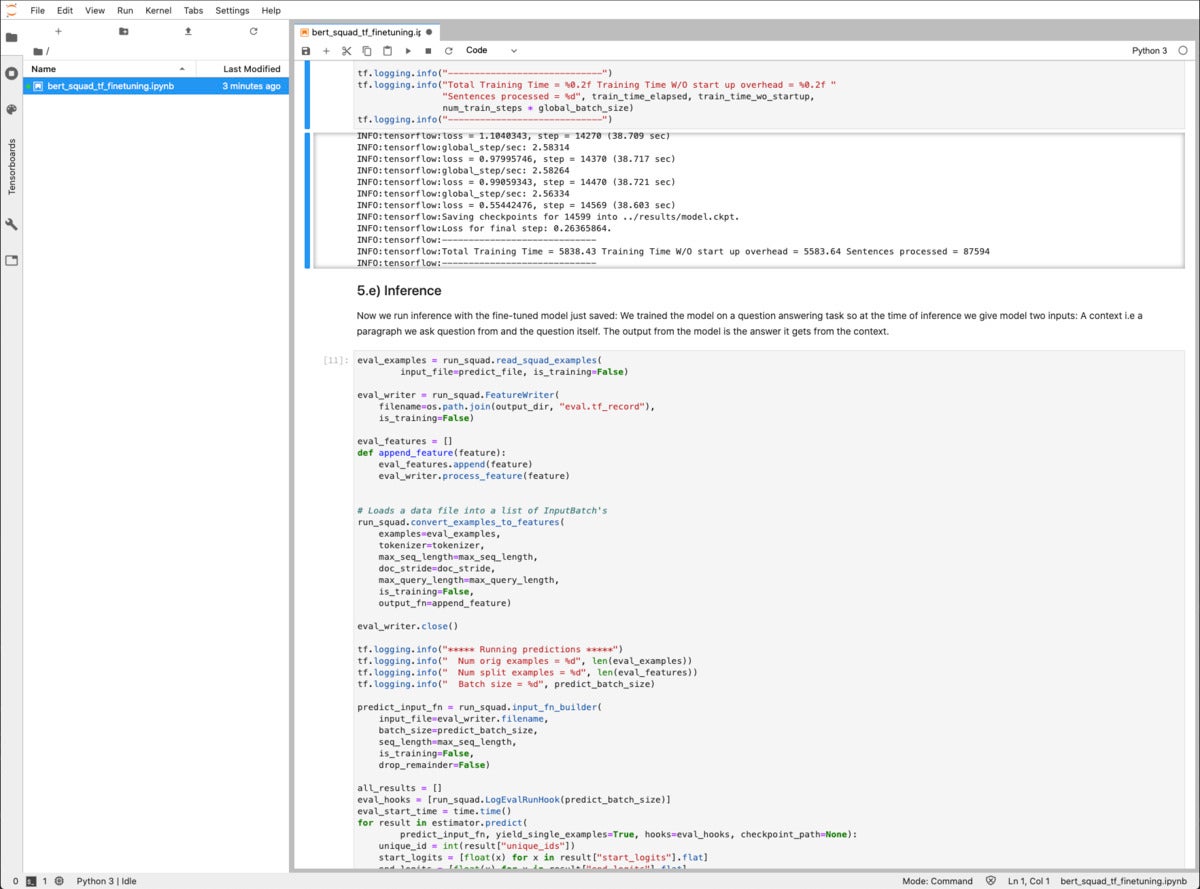

I spent most of a day going through the LaunchPad lab for AI practitioners, delivered primarily as a Jupyter Notebook. The folks at Nvidia told me it was a 400-level tutorial; it certainly would have been if I had had to write the code myself. As it was, all the code was already written, there was a trained base BERT model to fine-tune, and all the training and test data for fine-tuning came from SQuAD (the Stanford Question Answering Dataset).
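When fine-tuned on SQuAD, a BERT model does not generate answer text. For each token of the passage it outputs a start score and an end score, and the answer is the span with the best combined score. The sketch below shows that selection step using hypothetical, hand-written logits in place of real model output; production post-processing also excludes question and special tokens and usually searches only the top-k start and end positions.

```python
def best_answer_span(start_logits, end_logits, max_answer_len=30):
    """Pick the (start, end) token span maximizing start_logit + end_logit.

    Only spans with start <= end and at most max_answer_len tokens are
    considered, as in common BERT SQuAD post-processing.
    """
    best, best_score = None, float("-inf")
    n = len(start_logits)
    for i in range(n):
        for j in range(i, min(n, i + max_answer_len)):
            score = start_logits[i] + end_logits[j]
            if score > best_score:
                best, best_score = (i, j), score
    return best, best_score


if __name__ == "__main__":
    # Hypothetical passage tokens and logits for the question
    # "How long does LaunchPad access last?"
    tokens = ["launchpad", "gives", "teams", "two", "weeks", "of", "access"]
    start_logits = [0.1, 0.2, 0.5, 3.0, 0.4, 0.1, 0.0]
    end_logits = [0.0, 0.1, 0.2, 0.3, 2.5, 0.2, 0.6]
    (s, e), score = best_answer_span(start_logits, end_logits)
    print(" ".join(tokens[s : e + 1]))  # two weeks
```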

The A30 GPU in the server supplied for the LaunchPad got a workout when I reached the fine-tuning step, which took 97 minutes. Without the GPU, it would have taken much longer. Training a BERT model from scratch on, say, the contents of Wikipedia is a major undertaking that requires many GPUs and a long time (probably weeks).

Overall, Nvidia AI Enterprise is a very good hardware/software package for tackling AI problems, and LaunchPad is a convenient way to become familiar with Nvidia AI Enterprise. I was struck by how well the deep learning software takes advantage of the latest innovations in Nvidia Ampere architecture GPUs, such as mixed precision arithmetic and tensor cores. Working through the Nvidia AI Enterprise hands-on labs on Nvidia’s server instance was also a much better experience than running TensorFlow and PyTorch samples on my own hardware or on cloud VMs and AI services.
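Mixed precision training keeps master weights in FP32 while doing most of the math in a lower precision such as FP16, which tensor cores accelerate. One known hazard is that very small gradients underflow to zero in FP16, which frameworks counter with loss scaling. This sketch simulates the effect with Python's half-precision `struct` format (`e`); it illustrates the idea only and is not how TensorFlow or PyTorch implement it, and the gradient value and scale factor are arbitrary assumptions.

```python
import struct


def to_fp16(x):
    """Round a float to IEEE 754 half precision and return it as a float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_overflows(x):
    """True if x is too large in magnitude to store as a finite FP16 value."""
    try:
        struct.pack("<e", x)
    except OverflowError:
        return True
    return False


def fp16_gradient_with_scaling(grad, loss_scale):
    """Scale a gradient up, store it in FP16, then unscale in full precision."""
    return to_fp16(grad * loss_scale) / loss_scale


if __name__ == "__main__":
    grad = 1e-8  # hypothetical tiny gradient
    print(to_fp16(grad))  # 0.0: underflows in FP16
    print(fp16_gradient_with_scaling(grad, 2.0 ** 16))  # close to 1e-8
    print(fp16_overflows(70000.0))  # True: above FP16's maximum of 65504
```

Dynamic loss scaling builds on this: if a scaled gradient overflows FP16, the optimizer skips that step and lowers the scale, then raises it again after a run of overflow-free steps.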

All of the major public clouds offer access to Nvidia GPUs, as well as to TPUs (Google), FPGAs (Azure), and custom accelerators such as Habana Gaudi chips for training (on Amazon EC2 DL1 instances) and AWS Inferentia chips for inference (on Amazon EC2 Inf1 instances). You can even access TPUs and GPUs for free in Google Colab. The cloud providers also offer versions of TensorFlow, PyTorch, and other frameworks that are optimized for their clouds.

Assuming that you are able to access Nvidia LaunchPad for Nvidia AI Enterprise, test it successfully, and want to proceed, your next step should most likely be to set up a proof of concept for an AI application that has high value to your company, with management buy-in and support. You could rent a small Nvidia-certified server with an Ampere-class GPU and use Nvidia’s free 90-day evaluation license for Nvidia AI Enterprise to complete the POC with minimal cost and risk.

Pros

- Uses latest Nvidia GPUs

- 1U to 2U form factor server

- State-of-the-art AI software

- Good hardware-software integration

- Good hands-on labs

- Free for two weeks

Cons

- Only free for two weeks, with a possible two-week extension

- Will tend to lock you into the Nvidia ecosystem